Track the trends: Is it AI or is it authentic?

Welcome back to our blog series focused on the Misinformation Dashboard: Election 2024, a tool for exploring trends and analysis related to falsehoods regarding the candidates and voting process.

The prevalence of content generated by artificial intelligence has made it more difficult to differentiate between fact and fiction. Widely available AI tools allow people to create photorealistic but entirely fabricated images. How often are these fakes being passed off as genuine and used to spread misinformation, and what can we do to identify and debunk them?

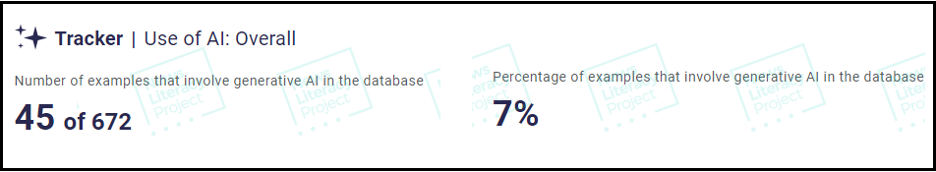

One of the goals of the News Literacy Project’s Misinformation Dashboard: Election 2024 is to monitor the use of AI-generated content to spread falsehoods about the presidential race. Now, with about 700 examples cataloged, let’s look at the impact.

Not the main source of misinformation

Researchers have long warned that AI-generated content could release a flood of falsehoods. And while there has certainly been an uptick in these fabrications, their use in election-related rumors has not been as widespread as predicted. The News Literacy Project (NLP) has found that AI-generated content has been used in only about 7% of the examples we’ve documented, or roughly 50 of about 700.

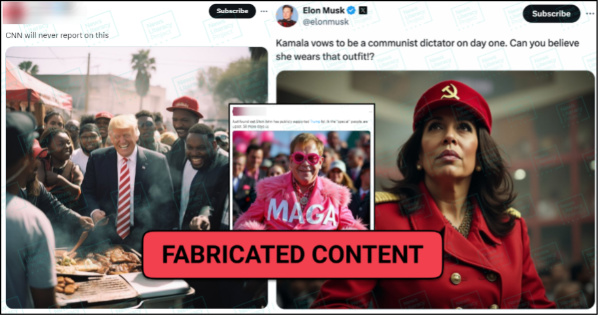

Completely fabricated images of former President Donald Trump, President Joe Biden or Vice President Kamala Harris make up most of the AI-generated content in NLP’s database. These digital creations can be used to bolster a candidate’s reputation — such as fabricated images showing Biden in military fatigues or Trump praying in a church — or to denigrate their character — like fabricated images of Harris in communist garb or Trump being arrested.

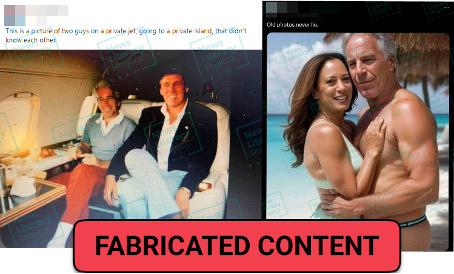

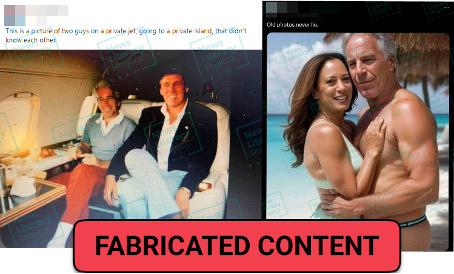

AI can be used to make “photos” that distort a candidate’s relationship with certain groups or people. NLP’s database includes several AI-generated images depicting Trump with Black voters, as well as a number of images that falsely depict candidates posing with criminals or dictators, such as the late convicted sex offender Jeffrey Epstein.

The impact of AI: Question everything

Generative AI technology is driving a concerning trend in how people approach online content: cynicism and “deep doubt,” a prevailing belief that it is impossible to determine whether anything is real. Some accounts now exploit this attitude by falsely claiming that genuine photographs are AI creations.

When enthusiastic crowds turned out at rallies for Harris in August, falsehoods circulated online attempting to downplay the support by claiming that the visuals were AI-generated. This same tactic was used after a photograph emerged that showed a group of extended family members of vice-presidential nominee Tim Walz offering their support to Trump. The photo of Walz’s second cousins — not his immediate family — was authentic, although efforts were made to dismiss it as AI-generated.

Low-tech solutions to high-tech problems

While the existence of AI fakes may make it feel like it is impossible to tell what is real, there is some good news. Whether a photograph is manipulated with computer software, an image is fabricated with an AI image generator or a piece of media is shared out of context, the methods to identify, address and debunk these fakes remain the same.

- Consider the source. Who is sharing the content, and do they have a track record of posting accurate information in good faith?

- What is the original source of a photo? The lack of a credit is a red flag.

- Look for supporting evidence. Have any standards-based news outlets also shared the image?

When it comes to AI images, you may not be able to trust your own eyes. Slowing down to consider these key pieces of context is the best way to approach any content online — whether it’s real or created by AI.

Visit the Misinformation Dashboard: Election 2024

You can find additional resources to help you identify falsehoods and recognize credible election information on our resource page, Election 2024: Be informed, not misled.