Hoaxes, conspiratorial nonsense, doctored poll results

This week’s rumor roundup runs the gamut of types of misinformation.

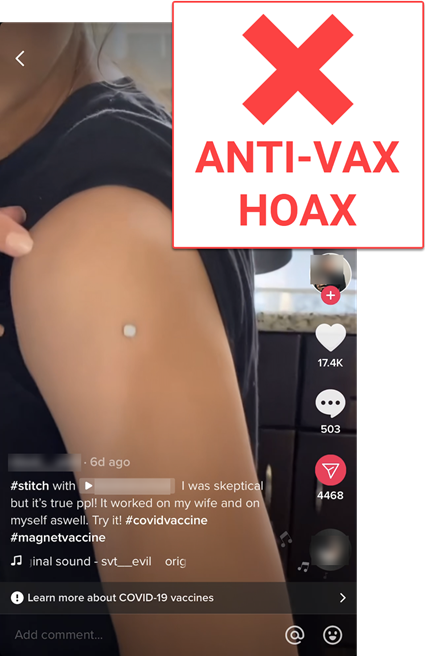

NO: COVID-19 vaccines do not magnetize your arm at the injection site. YES: This is a baseless hoax based on the widely debunked conspiracy theory that the vaccines contain microchips. NO: None of the available vaccines in the United States and Canada — including Pfizer, Moderna, Johnson & Johnson and AstraZeneca — list any metal-based ingredients, according to AFP Fact Check.

Related:

- “Fact Check-‘Magnet test’ does not prove COVID-19 jabs contain metal or a microchip” (Reuters Fact Check).

- “Covid-19 vaccines do not make you magnetic” (Sarah Turnnidge, Full Fact).

- “No, getting a COVID-19 vaccine won’t expose you to high amounts of electromagnetic radiation” (Madison Czopek, PolitiFact).

NO: Black Lives Matter did not say it “stands with Hamas,” the militant group that controls the Gaza Strip. YES: The organization said, in a May 17 tweet, that it “stands in solidarity with Palestinians.” YES: Fox News published the erroneous headline in the above screenshot. YES: The headline was later “updated to more closely reflect the Black Lives Matter tweet,” Fox said in an editor’s note.

Note: The inaccurate headline was picked up by a number of other outlets.

Tip: Be wary of screenshots of news coverage that are not accompanied by a link to the actual story.

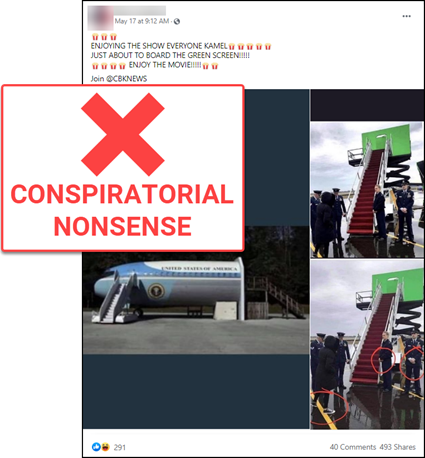

NO: These photos do not show Vice President Kamala Harris pretending to board Air Force One using a replica plane and a green screen. YES: They show (at left) a partial replica of Air Force One at a Secret Service training facility in Maryland and (at right) a green screen that was part of a shoot for the television show Designated Survivor in 2017.

Note: Rumors about Biden administration activities being faked or staged are offshoots of the QAnon conspiracy belief system, which includes the delusion that former President Donald Trump is secretly still president. QAnon followers contend the Biden administration is merely a performance and commonly encourage one another to “enjoy the show” — often including popcorn emojis — in reference to what they believe is the final act of an elaborate scheme to prepare the public for Trump’s apocalyptic return to power.

Related: “No, Biden wasn’t ‘computer generated’ in a March interview” (Ciara O’Rourke, PolitiFact).

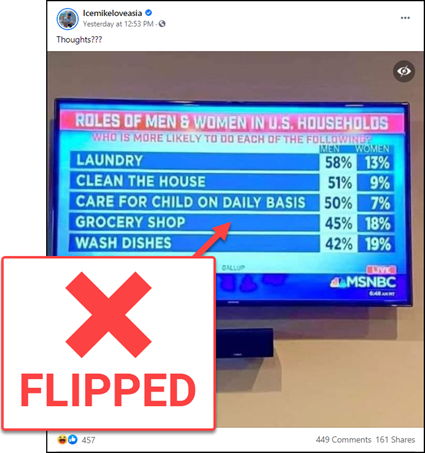

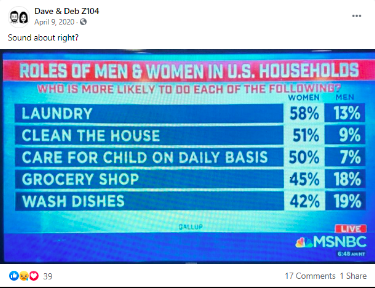

NO: A Gallup poll of heterosexual couples who are married or living together did not find that men are more likely than women to do common household chores. YES: The results for men and women listed in this graphic reverse the actual findings published by Gallup in January 2020. YES: Another Facebook post containing a screenshot of this graphic — purportedly from MSNBC, with the same time stamp in the bottom right corner — displays the results correctly:

An April 9, 2020, Facebook post shared by two DJs at a country music radio station in Utah shows the same MSNBC graphic with the correct results of the Gallup poll.

Rumor Rundown: COVID-19 scare tactics, Middle East misinfo and more

This week’s selection of viral rumors plays on people’s fears and exploits partisan beliefs.

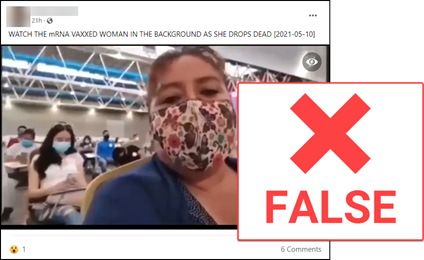

NO: The woman in the background in this video of a vaccination clinic in Mexico did not die after receiving a COVID-19 vaccine. YES: She fainted.

Tip: Be wary of posts that seek to connect isolated incidents with COVID-19 vaccines. Anti-vaccination activists continue to use coincidental events, including celebrity deaths, and misleading or out-of-context videos to spread fear and falsehoods about COVID-19 vaccines.

Related:

- “Facebook races to remove anti-vaccine profile picture frames” (Lauren Feiner, CNBC).

- “Just 12 People Are Behind Most Vaccine Hoaxes On Social Media, Research Shows” (Shannon Bond, NPR’s All Things Considered).

- “Distancing from the vaccinated: Viral anti-vaccine infertility misinfo reaches new extremes” (April Glaser and Brandy Zadrozny, NBC News).

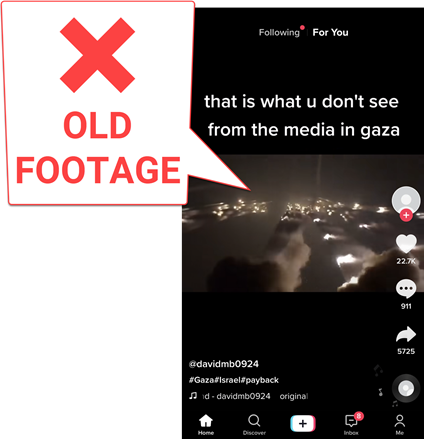

NO: The video in this tweet does not show Hamas militants firing rockets from populated areas in the Gaza Strip in May 2021. YES: This video has been online since at least June 2018 and was claimed to be related to the conflict in Syria. YES: The above tweet was posted by Ofir Gendelman, Israeli Prime Minister Benjamin Netanyahu’s spokesperson. YES: It has since been deleted.

Note: This video was also used out of context in a December 2019 tweet that claimed it was footage from Tripoli, Libya.

Tip: Photos and videos of rockets being fired, particularly at night, are easy to pass off out of context.

NO: This TikTok video does not show a barrage of rockets being fired at or from Gaza in May 2021. YES: This video appears to show a test of a multiple launch rocket system (MLRS) and has been online since at least November 2018.

Note: This clip also went viral out of context in January 2020 after Iran fired ballistic missiles at military bases housing U.S. troops in Iraq.

Related: “As violence in Israel and Gaza plays out on social media, activists raise concerns about tech companies’ interference” (Antonia Noori Farzan, The Washington Post).

NO: The U.S. Census Bureau did not confirm any conflicts or problems related to the number of voters in the 2020 presidential election. NO: Census figures do not show that there was “a discrepancy of nearly four million votes” in the election. YES: The total number of votes in the 2020 election exceeded the number of people who reported to the Census Bureau that they had voted. YES: More than 36 million people age 18 and older did not tell the Census Bureau whether or not they voted in the election. YES: Researchers previously have found mismatches between people who say they voted and their actual voting record.

Related: “‘A Perpetual Motion Machine’: How Disinformation Drives Voting Laws” (Maggie Astor, The New York Times).

Misleading memes and engagement bait

This week’s selection of viral rumors includes common subjects: celebrities, photos from space and frightening content as engagement bait.

NO: LeBron James did not say he didn’t want anything to do with white people. YES: In the first episode of the HBO show The Shop in 2018, James shared that he was initially wary of white people at his predominantly white Catholic high school in Akron, Ohio. YES: In telling this story on The Shop, James said [link warning: profanity], “when I first went to the ninth grade … I was so institutionalized, growing up in the hood, it’s like … they don’t want us to succeed … so I’m like, I’m going to this school to play ball, and that’s it. I don’t want nothing to do with white people. I don’t believe that they want anything to do with me.” YES: The conversation went on to clarify that these initial feelings soon changed as sports and basketball created friendships.

Note: This misleading quote has gone viral several times before. It recently recirculated after James tweeted a photo of a police officer who was identified as firing the shots that killed Ma’Khia Bryant in Columbus, Ohio, on April 20, along with the message “YOU’RE NEXT #ACCOUNTABILITY.” James later deleted the tweet.

Related:

- “Did Lebron James Say He Wants Nothing To Do With White People?” (Dan Evon, Snopes).

- “Viral image uses doctored photo to paint LeBron James as pro-China” (Andy Nguyen, PolitiFact).

NO: This is not a photo of Saturn. YES: It’s an artistic rendering of the imagined view from the Cassini spacecraft during one of its final, close passes over Saturn in 2017.

Tip: Fake or doctored photos supposedly from space are a common type of “engagement bait” online.

Resource: Reverse image search tutorial (NLP’s Checkology® virtual classroom).

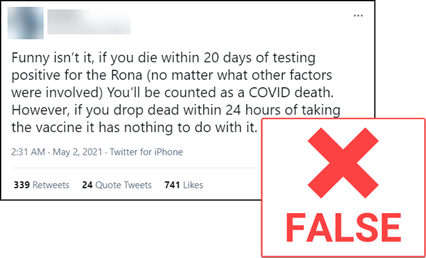

NO: COVID-19 is not automatically declared the cause of death for anyone who dies within 20 days of testing positive for the virus. YES: The cause of death in the United States is determined by local coroners, medical examiners, and other officials across more than 2,000 independent jurisdictions, according to the president of the National Association of Medical Examiners, who was interviewed by Lead Stories. NO: The Centers for Disease Control and Prevention does not control death certificate decisions and has no authority to overrule local medical examiners. NO: There is no conspiracy to falsely inflate the number of COVID-19 deaths.

Related: “How COVID Death Counts Become the Stuff of Conspiracy Theories” (Victoria Knight and Julie Appleby, Kaiser Health News).

NO: This video is not live footage of a gas station explosion. YES: This May 7 post on Facebook appears to use video footage from 2014 of a fire exploding at a gas station in Russia, according to the fact-checker Lead Stories.

Note: This is another example of “engagement bait.”

Tip: You can use reverse image search to look for the origin of videos by taking screenshots of different video frames.

Related: “How to find the source of a video (or, how to do a reverse video search)” (Gaelle Faure, AFP Fact Check).

Rumor rundown: Partisan falsehoods, logical fallacy, doctored content

Falsehoods about U.S. politicians and COVID-19 continue to go viral. A flawed study about violence against women and doctored content gained traction on social media by appealing to our emotions and beliefs. Below, we explain why these posts are actually misinformation and should not be believed, liked, shared or otherwise amplified.

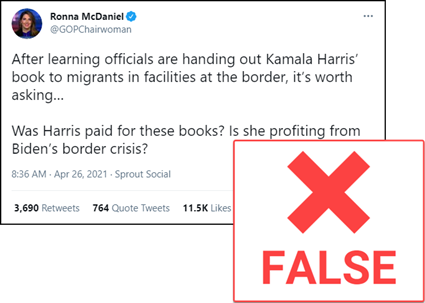

NO: Officials at a facility for migrant children brought from the U.S.-Mexico border were not handing out a children’s book written by Vice President Kamala Harris. YES: A copy of Harris’ 2019 book Superheroes Are Everywhere was donated during a book and toy drive to an “emergency intake site” for unaccompanied migrant children at the Long Beach Convention and Entertainment Center. YES: The baseless claim that copies of the book were included in welcome kits at taxpayers’ expense and distributed to each child at the site originated with an erroneous report published by the New York Post on April 23. YES: Fox News — which, like the Post, is owned by the Murdoch family — also published the report. YES: The Post eventually added an editor’s note at the end of the story to update it with a correction after it had spread widely on social media. YES: Fox News also made updates and corrections to its report.

Note: The false assertion that the book was being given to each child at the facility appears to have been based on a photo of a single donated copy left on a cot.

Also note: Laura Italiano, the Post reporter who wrote the original report, announced her resignation from the paper on Twitter on April 27 and claimed she was “ordered to write” the false report. The Post issued a statement denying Italiano’s claim.

Related:

- “How a photo and a Long Beach book drive led to a false story and attacks on Kamala Harris” (Erin B. Logan, Los Angeles Times).

- “New York Post Reporter Who Wrote False Kamala Harris Story Resigns” (Michael M. Grynbaum, The New York Times).

- “Fox News host admits his show was wrong about Biden limiting red meat consumption” (Daniel Dale, CNN).

Idea: Have students evaluate the steps the New York Post and Fox News took to correct the false report. Were the corrections sufficiently clear? Did the organizations hold themselves accountable for the error? Did they explain how it occurred?

NO: 85% of Americans did not approve of President Joe Biden’s April 28 speech to a joint session of Congress — the first of his presidency. YES: According to a CBS News / YouGov poll, 85% of American speech-watchers — only 18% of whom identified themselves as Republicans — approved of it.

Note: As CNN’s chief media correspondent Brian Stelter noted, the television ratings for Biden’s speech were significantly lower than similar speeches by past presidents. But such ratings do not necessarily reflect the total reach of the speech because they can’t take internet and other viewers into account.

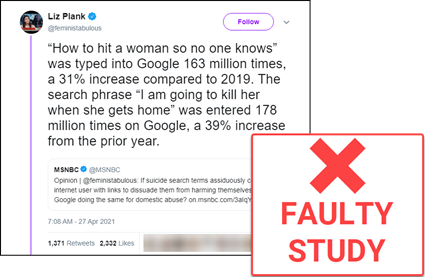

NO: The two violent phrases in this tweet were not “typed into Google” 163 million and 178 million times, respectively, over several months in 2020. YES: These figures that claimed a big increase in such searches from the prior year were based on a flawed study. YES: Searching these phrases returns several billion search results (the number of webpages a given search returns) but these should not be mistaken for search trends (the frequency of specific searches over time). YES: An analysis by the fact-checking website Snopes (linked above) using the Google Trends search tool determined that almost “no one used the website to look for information on ‘how to hit a woman so no one knows,’ including during the time window referenced in the study (March to August 2020).”

Note: Violence against women is alarmingly common. According to the World Health Organization, about 30% “of women worldwide have been subjected to either physical and/or sexual intimate partner violence or non-partner sexual violence in their lifetime.”

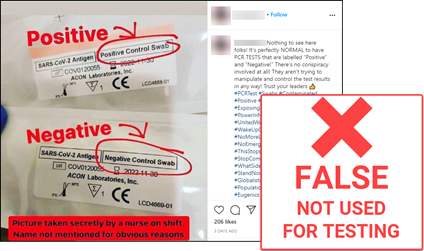

NO: These are not rigged COVID-19 tests. YES: They are control swabs included with some test kits that ensure they are working properly.

Note: The claim that this photo was “taken secretly by a nurse” is an example of an appeal to authority, a logical fallacy in which the opinion or endorsement of an authority is used as evidence to support an assertion. Because the nurse isn’t named, this example could also be classified as an appeal to anonymous authority (also known as an appeal to rumor).

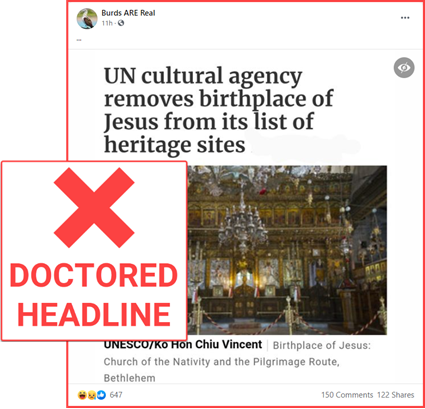

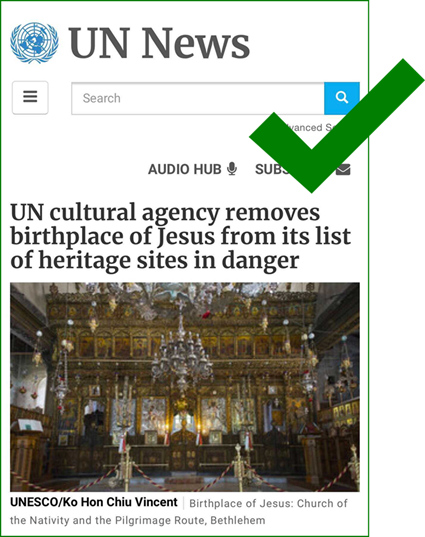

NO: The United Nations Educational, Scientific and Cultural Organization (UNESCO) did not remove the Church of the Nativity, officially recognized as the birthplace of Jesus, from its World Heritage List. YES: The screenshot in this Facebook post has been doctored to conceal the last two words in the actual headline: “in danger.” YES: UNESCO in 2012 added the site to its List of World Heritage in Danger because it had been damaged by water leaks. YES: The church was removed from the “in danger” list in 2019.

A screenshot of the 2019 report published by UN News showing the actual headline, which includes the words “in danger” at the end. A doctored version that recently went viral obscured these two words and generated outrage.

Image of flamingos in canal was artwork

A piece of digital artwork of flamingos in Venice’s Grand Canal was mistaken as authentic and shared on social media:

NO: This photo of flamingos in the Grand Canal in Venice, Italy, is not authentic. YES: It is a piece of digital artwork that was posted to Instagram on April 24.

Note: The verified Instagram account that shared it belongs to Kristina Makeeva, a photographer and digital artist in Moscow, who posts as “hobopeeba.” In reply to people who asked if the flamingo photo was real, she replied “no.”

Related: “The Animal Fact Checker” (Savannah Jacobson, Columbia Journalism Review).

Pence victim of PPE delivery hoax

This viral rumor drew a good deal of attention after a select clip was featured on Jimmy Kimmel Live!, and other prominent people amplified the false story before it was debunked:

NO: Vice President Mike Pence did not deliver empty boxes to the Woodbine Rehabilitation & Healthcare Center in Alexandria, Virginia, on May 7, pretending they were full of personal protective equipment (PPE). YES: Pence did deliver boxes filled with PPE to the nursing home. NO: Pence was not “caught on hot mic” admitting that the boxes were empty, as this tweet from Matt McDermott, a political strategist, claims.

YES: After the boxes of PPE were delivered, an aide told Pence that the remaining boxes in the van were empty, and the vice president made a joke about moving them “just for the camera.” YES: Jimmy Kimmel, host of the late-night talk show Jimmy Kimmel Live!, aired a “selectively edited” (as a campaign spokesman for Pence put it) clip of the delivery on his show that night, then tweeted the clip the next morning. YES: The claim that Pence’s team had staged the delivery went viral — and was amplified by a number of prominent media figures — before being debunked.

Images of protest signs often doctored

Protestors recently staged demonstrations in states that issued stay-at-home orders and business closures in efforts to slow the spread of COVID-19. Since then, images of protest signs with doctored messages have been circulating on social media.

NO: The protest sign in this tweet is not authentic. YES: The sign actually said “Give me liberty or give me death” (h/t @jjmacnab). YES: The photo — taken on April 17 in Huntington Beach, California — shows people protesting statewide stay-at-home orders.

Note: The photo, by Jeff Gritchen of The Orange County Register, is included in the gallery at the top of this report on the protest (possible paywall).

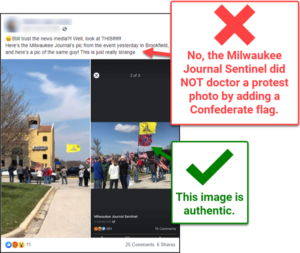

NO: The Milwaukee Journal Sentinel did not digitally add a Confederate flag to a photo of a protest against a recent extension of the stay-at-home order in Wisconsin. YES: The man holding a pole flying a Gadsden flag (“Don’t tread on me”) above a Confederate flag can be seen (at around the 0:20 mark) in a video of the protest posted to Facebook. YES: Another man wearing a nearly identical plaid shirt was holding another pole with a Gadsden flag.

Related:

- “No, a Journal Sentinel image of Confederate flag at Brookfield rally was not doctored, as false accusations on Facebook claim” (Milwaukee Journal Sentinel).

- National Press Photographers Association Code of Ethics.

Discuss: What standards and ethics policies relating to photos do quality news organizations strive to abide by? What kinds of alterations to photos are ethical and allowed at standards-based news organizations? What kinds are not? What kinds of consequences might photojournalists face if they are caught breaching those standards?

Idea: Invite a photojournalist from a local news outlet to discuss photojournalism ethics and standards with your students.

Use digital forensics tools to verify images

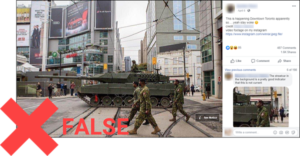

A photo of a tank in downtown Toronto is circulating on social media with a caption suggesting it was taken during the stay-at-home order. But is it really a demonstration of military enforcement of the order?

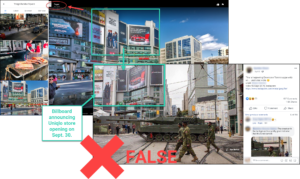

NO: It was not taken this month — or even this year. YES: Canadian Armed Forces Operations posted it on Twitter on Oct. 1, 2016, when members of the Canadian military arrived at Yonge-Dundas Square for Nuit Blanche, an all-night arts event. YES: On April 5, Toronto-area residents were advised “to expect a larger number of [military] personnel and vehicles” on the roads in the next few days as units relocated to a base about 60 miles from the city to set up a COVID-19 response task force.

Idea: Share an archived version of this post with students and give them a sequence of digital verification challenges, including:

- Determining whether the claim (that the photo was taken in 2020) is true. (It’s not.)

- Finding the original tweet from the Canadian military (linked above).

- Finding the precise location using Google Street View.

If you want to make the challenge even harder, ask students to use different ways to prove that the image is not from 2020. For example, they might use critical observation skills to note that one of the billboards in the background, though partially cut off, announces the opening of a Uniqlo retail store on Sept. 30. A quick lateral search for “Uniqlo store opening Toronto Sept. 30” should produce results confirming that the chain announced Sept. 30 as the opening day for a store in CF Toronto Eaton Centre. Or students might try to use the billboards in another way: If they can find another photo of Yonge-Dundas Square with the same billboards displayed, they can demonstrate that this photo wasn’t taken in 2020.

Comparing a user-contributed photo on Google Maps that is time-stamped October 2016 (top) with the Facebook post falsely claiming that the tank photo was taken in 2020 (bottom) offers further proof that the photo is not a current one.

COVID-19 video taken out of context

This screenshot of a video circulating on social media claims to show body bags piling up in a New York City hospital. Is this claim true?

NO: The video in this tweet was not shot at a hospital in New York City. YES: It was captured at the Hospital General del Norte de Guayaquil IESS Los Ceibos in Guayaquil, Ecuador. YES: The same video clip has previously been taken out of context to make false claims about conditions in hospitals in Madrid and Barcelona, Spain.

Note: The fact check linked above, published by the Spanish fact-checking organization Maldita.es, is a good example of using digital forensics tools and methods to verify the location and other details of a piece of content. This tweet thread by Mark Snoeck, an open source investigator, provides additional evidence that the video was shot at IESS Los Ceibos by comparing interior photos of the hospital (from Google Maps and Flickr) with stills from the video:

An image from Snoeck’s tweet thread compares two stills from the viral video (far right, top and bottom) with a photo of the interior of the Hospital General del Norte de Guayaquil IESS Los Ceibos in Guayaquil, Ecuador, to match details such as signage, electrical outlets, trim and door. Maldita.es used similar methods in its fact check.

Netanyahu shares Hallmark Channel clip

Israeli Prime Minister Benjamin Netanyahu sent several members of his cabinet a video clip of soldiers dumping bodies in landfills, claiming it was proof that Iran was concealing the number of COVID-19 deaths, Axios reported on April 1. According to the item by Barak Ravid, a diplomatic correspondent for Israel’s Channel 13 News who also writes for Axios, the video had been circulating in Iran and was sent to Netanyahu by his national security advisor, Meir Ben-Shabbat. Netanyahu talked about it during a conference call with his cabinet on March 31, and several people asked to see it, Ravid wrote. The video was actually a clip from a 2007 Hallmark Channel mini-series, “Pandemic.” The prime minister’s office told Ravid that the cabinet members who received the video were told that its authenticity had not been confirmed.

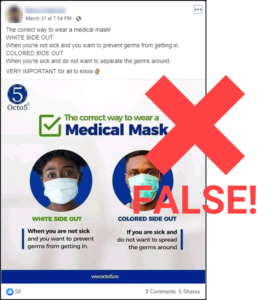

False and dangerous mask-wearing advice

Beware of social media posts from unverified accounts providing health advice. This post offers guidance on two ways to wear medical masks, depending on whether you are sick or not. Should you trust this advice?

NO: Medical masks are not intended to be worn two different ways — one if you’re sick (and trying to avoid infecting others) and the other if you’re not sick (and trying to avoid being infected). YES: The white side is absorbent; it should always be worn next to your mouth to stop droplets from passing through the mask and into the air. YES: The colored side faces out. YES: A number of graphics circulating on social media make this same false claim.

Note: This is a good example of how serious misinformation can be.

Misleading video, false cures swirl around COVID-19

NO: The man in the lead photo on this story from the “satirical news” website Viral Cocaine did not “drop dead” on the street in New York City’s borough of Queens. YES: The photos show a man who appears to have collapsed on the sidewalk in Flushing, Queens, on March 3. YES: He was wearing a surgical mask. NO: The incident was not related to COVID-19.

Note: Photos and videos of people who are unwell in public, along with speculation about COVID-19 as the cause, will almost certainly continue to circulate on social media. For example, a man who passed out on a train platform in Brussels prompted several bystanders to shoot video of the incident and to speculate that the new strain of coronavirus was the cause.

False risks, false cures

NO: COVID-19 does not cause pulmonary fibrosis (scarring of the lungs). NO: Holding your breath for 10 seconds is not a reliable test for pulmonary fibrosis — or for COVID-19. YES: Drinking water is generally good for you, and proper hydration is important during treatment for any infection. NO: Frequently drinking water does not prevent infection from the current strain of coronavirus by washing the virus into your stomach.

Note: Like many viral rumors, this one includes a request to “send and share” this falsehood to “family, friends and everyone.” You should be skeptical of user-generated material that cites sources that are anonymous or unfamiliar, especially if it explicitly asks you to share it widely.

Also note: There are numerous “copy-and-paste” style viral rumors — many of them citing second- or third-hand advice from an authoritative source — circulating via social media, email and text message about the virus.

Also note: There are at least a dozen iterations of this “advice” circulating online, including one that says it is from an “internal message” to the “Stanford Hospital Board.” In a post on March 11, Stanford Health Care debunked this (scroll to the bottom of the webpage).

Misinformation creates coronavirus ‘infodemic’

Here are just two recent examples from the coronavirus “infodemic”:

Panic buying not staged by media

NO: A mainstream media (MSM) news outlet did not stage evidence of COVID-19 panic by removing goods from shelves in a grocery store. YES: The Romanian TV news program Observator did use video of empty store shelves and coolers in a report on Feb. 26 about people buying large quantities of specific items, including flour, canned goods and water, to prepare for a possible outbreak of the disease. YES: The photo in the post above was taken during a live report broadcast the next day. The news channel whose reporter appears in the photo above has debunked this claim.

Escape from quarantine post a hoax

NO: A man in his early 20s did not escape from a mandatory COVID-19 quarantine on a U.S. military base. NO: The Centers for Disease Control and Prevention (CDC) did not run this Facebook ad about this nonexistent “escapee.” YES: This ad (note the word “Sponsored”) was purchased by an imposter page named “Covid19” and using the CDC logo.

WHO describes a coronavirus ‘infodemic’

There is far more misinformation about COVID-19 and the strain of coronavirus that causes it than we can adequately address here. For additional coverage of what the World Health Organization has called an “infodemic,” see:

- “Here’s A Running List Of The Latest Disinformation Spreading About The Coronavirus” (Jane Lytvynenko, BuzzFeed News).

- “Surge of Virus Misinformation Stumps Facebook and Twitter” (Sheera Frenkel, Davey Alba and Raymond Zhong, The New York Times).

- “In Facebook groups, coronavirus misinformation thrives despite broader crackdown” (Brandy Zadrozny, NBC News).

Questions for discussion in the classroom

Why do you think rumors like this have appeal? What journalism standards and ethics policies address the authenticity of photos and video footage?

Virus not causing beer drinkers to avoid Corona brand

NO: More than a third of Americans are not avoiding Corona beer because of the current outbreak of COVID-19, the disease caused by a newly identified strain of coronavirus.

YES: A telephone survey of 737 American beer drinkers conducted on Feb. 25 and 26 by 5W Public Relations, a New York City agency, found that 38% of those surveyed (or 280 respondents) “would not buy Corona under any circumstances now.”

NO: A Feb. 27 press release publicizing the survey contains no evidence that the COVID-19 outbreak is the reason that any of those 280 people would not buy Corona beer right now.

YES: The press release also cites an “uptick in searches for ‘corona beer virus’ and ‘beer coronavirus’ over the past few weeks.”

YES: There is a genre of humorous memes that make puns about the virus and beer. Some joke that the beer is an antidote to the virus.

Related:

- “What the Dubious Corona Poll Reveals” (Yascha Mounk, The Atlantic).

- “Corona claps back at claims coronavirus scare will hurt sales” (Josh Noel, Chicago Tribune).

Coronavirus hoaxes continue to spread

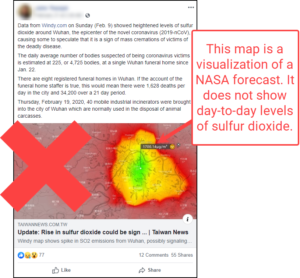

NO: This map does not show day-to-day levels of sulfur dioxide (a toxic gas) in Wuhan, China. YES: It is a visualization, created by Windy.com (a company that provides interactive weather forecasting services), of a NASA estimate based on past data. NO: This is not evidence that mass cremations are being carried out in Wuhan.

NO: You cannot catch the new strain of coronavirus (COVID-19) from the air in bubble wrap.

Note: This rumor is surprisingly widespread on Twitter.

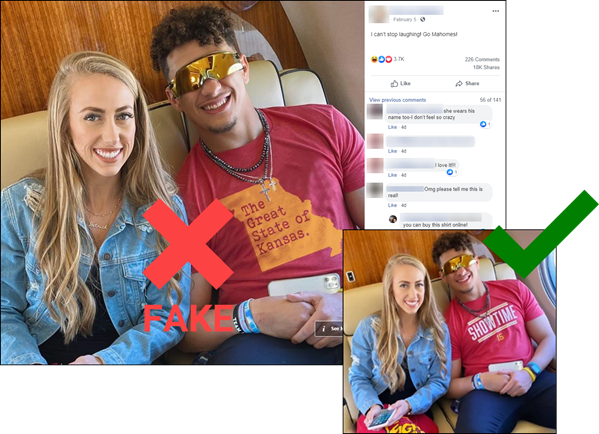

Patrick Mahomes’ doctored shirt

A social media post showed Kansas City Chiefs quarterback Patrick Mahomes wearing a shirt with doctored text. The doctored image included the words “The Great State of Kansas” printed on an outline of the state of Missouri.

What he actually wore

Mahomes wore a shirt that said “SHOWTIME” with his jersey number (15) in the original photo. His agent tweeted the photo after the Kansas City Chiefs beat the San Francisco 49ers in the Super Bowl on Sunday, Feb. 2.

Prompted by Trump tweet

President Donald Trump made an error in a tweet after the Super Bowl on Feb. 2, saying that the champion Kansas City Chiefs “represented the Great State of Kansas…so very well.” Trump deleted the tweet and corrected the mistake in a second tweet less than 12 minutes later.

Take note

The websites Trump Twitter Archive and Factba.se archive Trump’s tweets, including those that have been deleted.

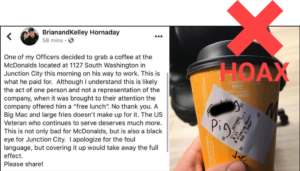

Coffee order did not come with a slur

NO: A McDonald’s employee in Junction City, Kansas, did not write “f*cking pig” on a police officer’s coffee cup. YES: A 23-year-old officer with the Herington Police Department would later say he wrote the expletive on his own coffee cup as a “joke.”

YES: The department’s police chief believed the officer’s initial claim that a McDonald’s employee was responsible for the slur and posted about the incident on Facebook, drawing national attention and news coverage. YES: The officer resigned on Dec. 30 after McDonald’s reviewed video footage of the order being served, which showed that no restaurant employee wrote on the officer’s cup.

Oklahoma incident

A police officer did receive a coffee with “pig” on the order label at a Starbucks in Kiefer, Oklahoma, in November.

In need of correction?

The New York Post, which is one of several news outlets that published reports about the staged incident, has published follow-up articles, but has yet to publish an editor’s note or other correction on its original story.

This is not Greta Thunberg firing a rifle

NO: The person shooting a rifle in this video is not 16-year-old climate activist Greta Thunberg. YES: She is Emmy Slinge, a 31-year-old engineer in Sweden (the page is in Swedish).

NOTE: If you use Google’s Chrome browser, Google will offer to translate pages written in languages other than English. You can also use translate.google.com.

ALSO NOTE: The claim that the video showed Thunberg shooting the rifle was an especially popular meme in Brazil, and Comprova, a fact-checking initiative supported by four news organizations there, was the first to debunk it (the page is in Portuguese). A reporter from Estadão, one of the news outlets involved with Comprova, contacted Slinge to confirm that she was the person in the video.

Resource: Comprova used the InVid fake news debunker browser extension to find the source video.

Satirical piece makes the rounds on clickbait sites

A “satirical” story falsely claimed that two children of U.S. Rep. Ilhan Omar, a Minnesota Democrat, were arrested and charged with arson for a nonexistent fire at the nonexistent St. Christopher’s Church of Allod in Maine.

Bustatroll.org, one of a network of satire sites run by the self-proclaimed “liberal troll” Christopher Blair, originally published this piece in July. Blair frequently provokes incautious readers online. In this post, he uses the word “Allod” in the name of the non-existent church. “Allod” is an acronym for “America’s Last Line of Defense,” another of Blair’s sites.

The item about Omar’s children has been copied (and in some cases plagiarized) by a number of clickbait sites seeking ad revenue. Links to the stories continue to be shared online, and many of those sites do not label the item as satire.

For teachers

Blair publishes absurd falsehoods, which he prominently labels as satire, but does so to troll and mock conservatives. Is this a legitimate form of “satire”? Why or why not?

If Blair’s obviously labeled pieces get mistaken as actual news, who is at fault? Is it unethical for Blair to profit from his satire through ad placements on his websites? Is it unethical for digital ad brokers to place ads on Blair’s sites? On sites that plagiarize Blair’s work?

Brexit video edit changed its meaning

Partisans in the United States certainly have no monopoly on editing or manipulating videos and other content to make their political opponents look bad. Take this recent example from the United Kingdom.

In an appearance Nov. 5 on Good Morning Britain, Keir Starmer, a member of Parliament and the Labour Party’s shadow Secretary of State for Exiting the European Union, did not refuse to answer, or fail to answer, a question about his party’s position on Brexit — the process by which the nation will leave the European Union (“Britain” + “exit” = “Brexit”). It was approved by British voters in 2016.

Just kidding?

The official Facebook page for the Conservative Party, which currently holds power, posted an edited version of the interview that made Starmer appear to be stumped by a question from Good Morning Britain host Piers Morgan. The full footage of the interview, available on the program’s YouTube channel, shows that Starmer promptly answered Morgan’s question.

The day after the Conservative Party posted its video on Facebook, the party’s chairman, James Cleverly, in three separate interviews described the doctored video as “humorous,” “light-hearted” and “satirical.”

For discussion in the classroom:

- Should Facebook flag this post for its fact-checking partners?

- Should it demote it in the platform’s algorithm to stop it from spreading widely?

- Should political parties be held to the same community standards on social media as other users?

- Is it important for people to be able to see examples of a political figure or party engaging in misleading spin or fabrications?

- Do you think tactics like this ultimately help or hurt the politicians and political parties that use them?

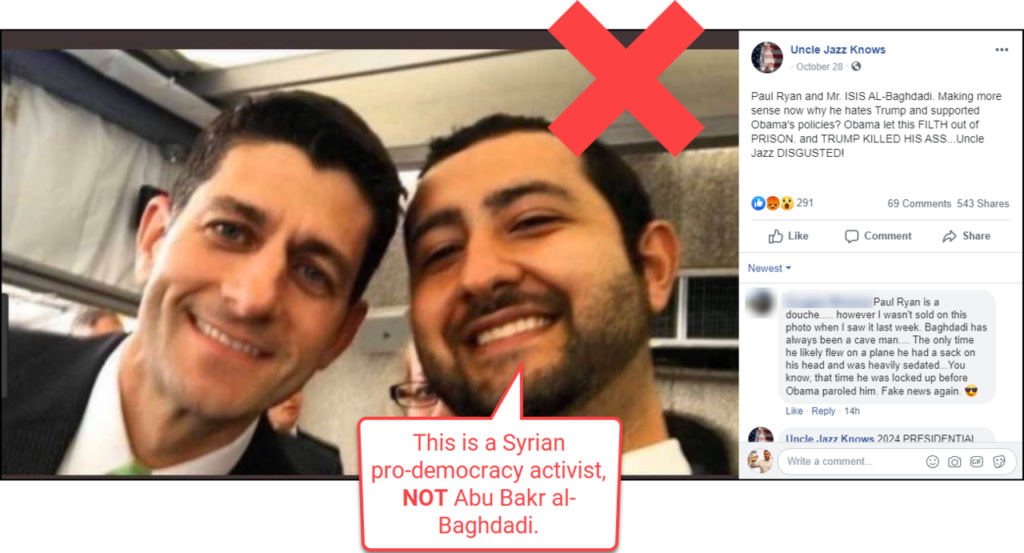

False news around al-Baghdadi’s death

This week in The Sift® we examine several viral rumors related to the killing of Abu Bakr al-Baghdadi, the founder and leader of the Islamic State terrorist organization. Here’s our rundown:

Rep. Ilhan Omar, a Minnesota Democrat, did not cry over or praise al-Baghdadi, or offer condolences to his family, after he killed himself on Oct. 27 during a U.S. military raid. A “satirical news” website, Genesius Times, published a fictional piece last week based on this claim. The photo of Omar used in the Genesius Times item (and in the Facebook post above) is from a news conference in April.

Note: While the Genesius Times’ tagline provides a prominent disclaimer in its header about the site’s lack of credibility (“The most reliable source of fake news on the planet”), the comments about this item on social media and on the website suggest that a significant number of people believed it.

Also note: The Genesius Times website contains ads placed by RevContent, a digital ad broker, and claims to be a participant in an affiliate advertising program run by Amazon.

Discuss: Does this website count as satire? Is its style of satire ethical?

The man on the right is not Abu Bakr al-Baghdadi. He is Mouaz Moustafa, the executive director of the Syrian Emergency Task Force, a nonprofit organization that advocates for democracy in Syria. Moustafa posted this selfie to his Instagram account in May 2016 after meeting with then-House Speaker Paul Ryan to discuss the situation of civilians in Syria.

CNN did not describe Abu Bakr al-Baghdadi as an “unarmed father of three” on a chyron during a news broadcast. CNN did not refer to the terrorist leader as a “brave ISIS freedom fighter” on screen during a broadcast. The image of CNN anchor Don Lemon in this fake screenshot is not from October 2019. It has been around since at least December 2018.

Note: As the fact check from Snopes points out, this fake screenshot appears to be an allusion to a headline on The Washington Post’s website that referred to Baghdadi as an “austere religious scholar,” prompting sharp criticism.

Discuss: Do you think that this fake screenshot was created as a joke? Is there evidence that it tricked people who saw it online? Why do people manipulate screenshots of newscasts? Once a fake screenshot is created, can it be controlled online? What direct or indirect effects might this fake screenshot have when someone mistakes it as authentic?

Doctored images designed to provoke

Every day doctored images pop up on social media — and often go viral thanks to their provocative content. Take these two examples from the past week:

This image of Donald Trump with his parents, Mary and Fred, is not authentic. The Ku Klux Klan robes were added to a photo that was taken in 1994.

Faked face covering

People are not allowed to cover their faces for a driver’s license photo in Ontario, Canada. The photo seen in the Facebook post — of a woman wearing a face veil — was added to an image of a sample Ontario driver’s license. This rumor isn’t new; it was debunked by Snopes in 2015.

The same doctored image also appeared in 2017 in a false report by a Russian radio station that claimed it was from Brazil.

For teachers

In the first photo (Trump and his parents), what emotions does this claim elicit? How can emotions override rational responses to information?

In the case of the doctored driver’s license photo, why might this false image have recirculated in October 2019? What was happening in Canada at that time? Do you think that it will circulate again? Why or why not?

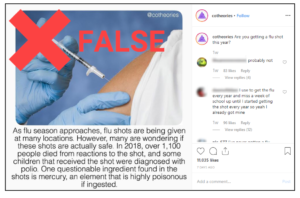

Inoculate yourself against flu shot rumors

Are you ready for flu season? Maybe you’ve stocked up on hand sanitizer, tissues and ibuprofen. And if you’ve already rolled up your sleeve to get a flu shot, then consider yourself well prepared.

But if you have concerns about the vaccine, it’s time to arm yourself against the viral rumors about it and learn the truth about protecting your health.

So don’t let this multi-tentacled rumor (pictured above) scare you. Its claim that “over 1,100 people died from reactions to the shot” in 2018 is utterly untrue. (In extremely rare cases, people can experience serious allergic reactions to ingredients in the vaccine.)

It also claims that some children who received flu shots have contracted polio — a once-common disease with often debilitating outcomes. The flu vaccine has nothing to do with polio, and the poliovirus is not an ingredient in the vaccine. No children have ever gotten polio from a flu shot.

Finally, that post claims that mercury is a “questionable ingredient” in the vaccine. Vaccines in a multi-dose vial may contain the preservative thimerosal, which has a trace amount of mercury. However, thimerosal is not poisonous and has been used safely in vaccines since the 1930s. There is no thimerosal in the single-dose syringes and nasal sprays often used to administer the vaccine.

Another rumor about this season’s flu vaccine falsely claims that getting a flu shot makes you “an active live walking virus.” It doesn’t. A French parody website, SecretNews, originated this fabrication in August.

Remember, just like the flu itself, false viral rumors about the flu vaccine are a perennial presence. But if you call on your news literacy skills, you can get to the truth and protect your — and your family’s — health.

When satire causes confusion

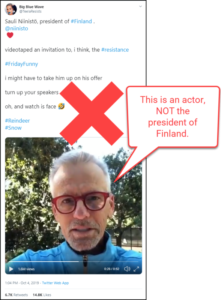

It is true that Sauli Niinistö, the president of Finland, met with President Donald Trump on Oct. 2 at the White House. But Niinistö did not later post a video in which he said he prefers “the company of reindeer and snow.” The video, a piece of satire, features actor Rob Paulsen, who created it and shared it on his Instagram account.

Satire out of context

This is an example of a piece of satire that has circulated outside its original satirical context, causing confusion.

For teachers

Should Paulsen have labeled this video more clearly as a piece of satire? Is there anything he could have done to prevent it from being misunderstood if it was copied and used elsewhere online? Is it reasonable to expect the creators of satire to take steps to prevent such confusion, or is that the sole responsibility of the audience? Even if you always recognize satire, can other people mistaking a piece of satire for something real have an impact on you?

Fact-check this tweet

President Donald Trump visited a newly replaced section of the Otay Mesa border wall near San Diego on Sept. 18 and praised its “anti-climb” features. The image shown here implies those features do not work. But it requires a closer look.

The border wall shown in this tweet is not the Otay Mesa wall. It is a section of border fencing in Imperial Beach’s Border Field State Park, about 10 miles to the west of Otay Mesa. The image does not show people crossing into the U.S. It does show recently arrived members of a caravan of migrants who climbed and sat atop the fence before returning to the Mexican side of the border in November 2018.

For teachers

Use this viral rumor to teach students how to do a reverse image search and use Google Street View. Using Google’s Chrome browser, right-click the image in this archived version of the tweet and select “Search Google for Image” from the menu. Use the image search results to find a credible source and debunk the tweet’s claim. Then challenge students to locate that section of fence using Google Street View.

Border wall timeline

Show students how to use the Google Street View timeline to see when the section of fence in the photo might have been constructed. Ask them whether it appears to have been updated or otherwise changed at any time in the last few years. (Hint: The lighter colored section of fence appears in the December 2015 capture of the fence, but was not there in April 2009.)

Don’t let a viral meme infect you

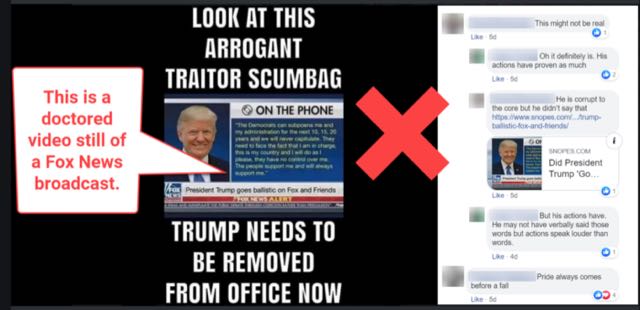

Did you see the viral meme featuring President Donald Trump that circulated last week? It combined an old image from a Fox News program, a doctored caption and a false quote.

The president did not say the following during a phone interview on Fox and Friends:

“The Democrats can subpoena me and my administration for the next 10, 15, 20 years and we will never capitulate. They need to face the fact that I am in charge, this is my country and I will do as I please, they have no control over me. The people support me and will always support me.”

The caption “President Trump goes ballistic on Fox and Friends” never appeared on screen. Instead, a video still from an April 25 phone interview with Trump on Hannity, another Fox News program, was manipulated to add the false quote and text.

The comments on one instance of this false meme on Facebook (archived here) show not only that a number of people believe that the quote and the image are authentic, but also how people sometimes rationalize false information as “true.”

Remember, purveyors of misinformation cross all demographics and partisan identities. So if your finger is itching to “like” or share a post that confirms your closely held beliefs, take a step back. Look deeper to identify the source of the content and determine its credibility before you jump on a viral bandwagon and get taken for a ride.

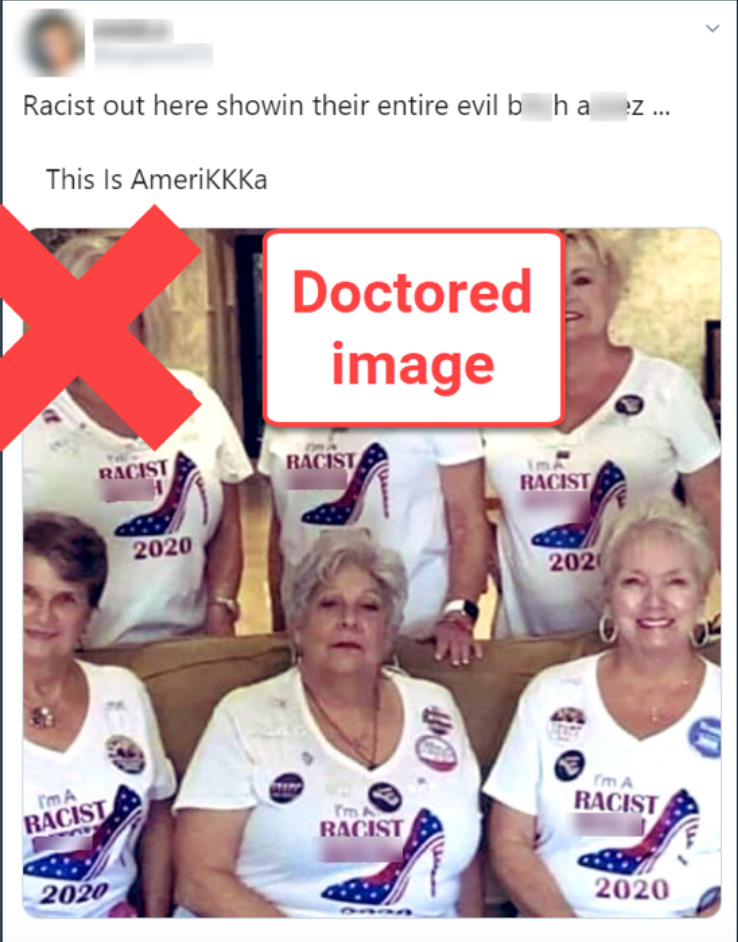

Doctored images can fool and mislead us

In this age of digital manipulation, you can’t always believe your eyes. The image above is a good example. It circulated on Twitter in mid-September 2019. The image shows a group of women wearing T-shirts with the words “I’m a racist [expletive] 2020.”

Uh, not quite: The women were actually wearing T-shirts with the words “I’m a Trump girl 2020.” The original photo was shared on social media following President Donald Trump’s rally in Greenville, North Carolina, in July.

The words on the T-shirts were digitally altered, and the false image was posted to Twitter. (You can see the doctored image and the original here. You can view a larger version of this image, which includes the profanity, here.)

Messing with messages

This is just one example of a frequent misinformation tactic. It is extremely common for photo manipulators to target T-shirt messages. Before you like or share an image, confirm its veracity by doing a reverse image search using tools from Google and TinEye. You can also use Snopes or other fact-checking sites.

This example also illustrates how misinformation that targets strong emotions and controversies can cause people to accept — or even rationalize — their initial perceptions when a claim upholds a personal conviction or accepted truth.

If you discover content that has been manipulated or falsified, be sure to call it out as misinformation and prevent others from being misled.

‘Artistic license’ or misinformation?

It would be a dull world if creative types weren’t allowed artistic license — the leeway to interpret something without being strictly factual.

However, if someone wants to make a point about a real issue but plays fast and loose with the facts, does artistic license become misinformation?

Two recent examples raise that question.

The Post parody

The activist artist troupe the Yes Men produced a fake issue of The Washington Post with a front page story declaring that President Donald Trump had resigned and made a hasty retreat from the White House. The realistic-looking “newspaper,” with a publication date of May 1, 2019, was handed out at a number of locations around Washington, including in front of the White House, on Jan. 16.

Hey @washingtonpost: Someone is passing out fake copies of the paper (top)? For comparison, a real issue of the Post below. pic.twitter.com/j7svhBDfJF

— Adrienne Shih👩🏻💻 (@adrienneshih) January 16, 2019

In a world where “fake news,” altered images and viral rumors are the order of the day, the fake issue of the Post was presented as political satire, a way to capture attention about real issues in a way that, after a moment or two, leaves no doubt that it is a parody. But does it count as “fake news”?

While the fake Post was widely criticized (including by the Post itself), its defenders saw the Yes Men’s work as clearly a parody: “It’s not intended to deceive people forever or to inspire violence,” April Glaser wrote in Slate. “It’s intended to force someone who sees it to do a double take and consider what it would mean if this headline were an account of actual political events. It’s satire and exaggeration.”

Photo fakery

Meanwhile, the #10yearschallenge meme was sweeping social media, with people posting photos of themselves 10 years ago next to current images. Environmentalists saw this meme as an opportunity to starkly illustrate the effects of climate change.

But some of those side-by-side images were faked — including this tweet from Jan. 14, which garnered nearly half a million “likes” and was shared nearly 370,000 times over a couple of days:

It claims to show how much one glacier had melted over the last decade. It doesn’t.

The photos are literally from opposite ends of the Earth and were taken only two years apart. The NASA photo (left) is from Antarctica in 2016. The National Snow and Ice Data Center image (right) is from the Arctic in 2018.

Nicolas Bilodeau, who created the tweet, told AFP Fact Check that he was looking for images that would provoke a reaction around global warming. Finding photos of the same location to illustrate this proved “too complicated,” he said, so he used images that he thought would convey his point. He justified his decision as “artistic license.”

What’s the difference?

In the case of the fake newspaper, the future date of the issue is an immediate tipoff that something is awry. And handing out printed copies (there was an accompanying website that garnered less attention) removed the knee-jerk, viral sharing aspect that makes misinformation so potent.

The faked photo collage, however, does not depict what it claims to show. Based on the number of retweets and “likes,” the tweet misled many. While the deceit itself causes damage, it also leaves the public more cynical about information they see online and makes it more difficult for people to know what to believe about key issues.

And that’s not artistic license. That’s misinformation.

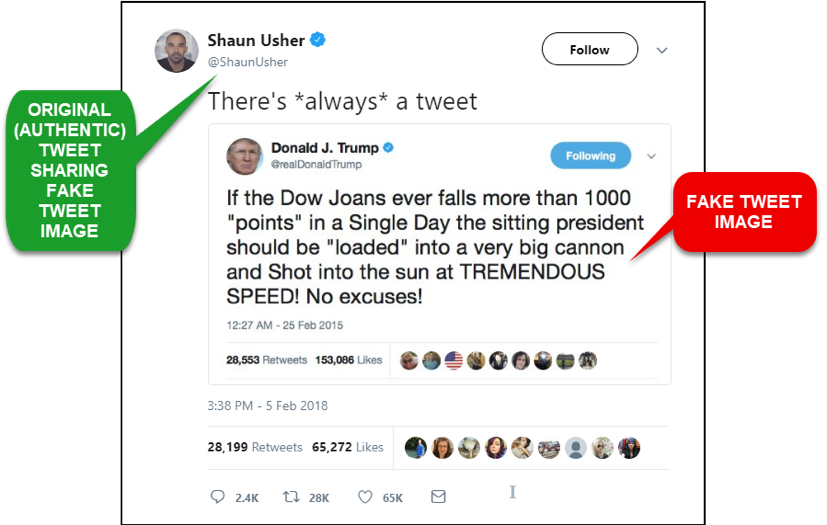

A fake Trump tweet goes out of control

President Donald Trump’s Twitter activity gets a lot of attention — some of it due not to his actual tweets, but to fake tweets attributed to him that are created and shared online.

He’s not alone; fake tweets are published in the names of many public figures. However, thanks to both Trump’s outsize personality and his current position, his tweeting does generate a context that is uniquely suited for false tweets to go viral: He is polarizing, so people tend to react to him with a high degree of emotion; his authentic tweets are often surprising and unorthodox; and he has been extremely active on Twitter (more than 37,000 tweets) since joining the platform in March 2009.

Insight: Misinformation generally prompts a strong emotional reaction that bypasses our rational minds by igniting our biases.

Thus, when a new, unconventional tweet attributed to Trump surfaces in the online chatter of the day, it is often assumed to be authentic. His critics are simultaneously outraged and unsurprised; their emotions and confirmation bias kick in; and they comment and share without stopping to confirm whether the tweet is legitimate.

Add to this the fact that convincing fake tweets are incredibly easy to produce — using any number of online generators that convert text into an authentic-looking image of a tweet — and you have a perfect storm for viral misinformation.

The ‘Dow Joans’ joke

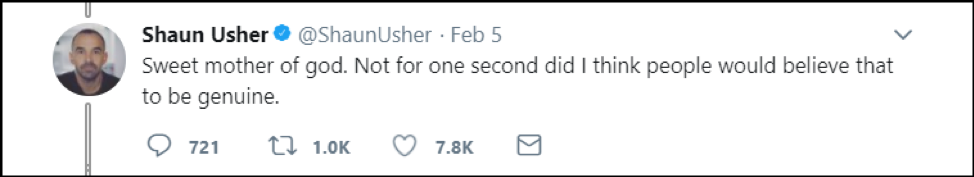

This fake Trump tweet — which includes a publication date of Feb. 25, 2015, more than three months before Trump declared his candidacy for the White House — actually circulated on Feb. 5, 2018, the day the Dow Jones index dropped 1,175 points.* It was created by Shaun Usher, a British author, blogger and curator of historic letters. He claims to have shared it as a joke — a parody of the many instances when Trump’s pre-presidential tweets have clashed with some aspect of his presidency.

Shortly after sharing the fake “Dow Joans” tweet, Usher expressed surprise that people were taking it as genuine:

Key term: Poe’s Law is a maxim of internet culture that says an unlabeled satirical comment or parody post can be easily mistaken for a sincere, legitimate comment or for a post by a troll or extremist.

Insight: A significant number of viral rumors begin as jokes or as unlabeled satire.

Moments later, Usher went on to explain the speed with which the fake tweet went viral and the reason he decided not to delete his original tweet, to which the fake one was attached: to preserve “its place of birth,” presumably so people who encounter it could find its origins if they looked.

Within a matter of hours, several fact-checkers had contacted Usher to confirm the origins of the tweet. Those debunks made the truth behind this rumor easy to find. So do archives of Trump’s tweets — including trumptwitterarchive.com, which lists all of his tweets from the time he joined the platform, and “All the President’s Tweets” from CNN.

Insight: A fake tweet is commonly accompanied by the false assertion that it was deleted, which is meant to explain why it’s being shared as an image and why it doesn’t appear in the alleged author’s actual Twitter feed. This is a red flag.

Tool: Politwoops is a database of actual tweets that were posted, then deleted, by public officials.

Still, don’t be surprised if the “Dow Joans” tweet recirculates if the Dow Jones suffers another significant drop while Trump is in office.

What can we learn?

A significant number of viral rumors begin as jokes — as pieces of satire created and shared by individuals who mistakenly believe that the humor is obvious enough to not need labeling. This highlights a few important points that explain why misinformation goes viral so quickly.

First, misinformation engages our biases by igniting our emotions. All humans have an innate tendency to seek confirmation of their beliefs — to “lean in to,” or underscrutinize, information that confirms them and to “lean away from,” or seek reasons to dismiss, information that complicates them.

Key term: Confirmation bias is the innate tendency for our existing beliefs to interfere in our evaluation of information and evidence, causing us to seek justification for dismissing or accepting details in ways that validate our current viewpoint.

(To see this in action, take a look at the replies that were made to Shaun Usher’s original tweet along the lines of “I wish” or “This (sadly) is fake.”)

Second, confirmation bias can interfere with recognition of satirical posts, especially when those posts aren’t labeled as satire. It can also encourage people to like and share misinformation too quickly, without stopping to verify it — a perfect recipe for virality.

Finally, as Usher himself quickly realized, once a piece of misinformation is on the open web, it’s all but impossible to control. Even satire that is clearly labeled, or is published by a well-known source of satirical content, can be easily shared outside of that satirical context and mistaken for a genuine statement. For instance, someone could copy the misinformation — in this case Usher’s fake tweet image — and share it on Twitter, where it is likely to go viral again outside its original context:

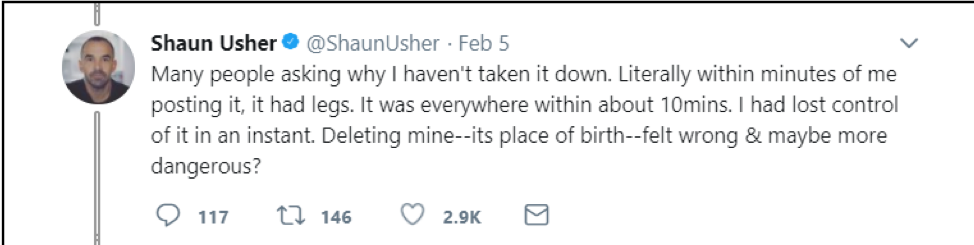

Or the misinformation gets copied and shared on another platform, like Usher’s fake tweet did in an anti-Trump community on Reddit:

Insight: Viral misinformation usually quickly goes “cross-platform,” meaning that it’s shared on other social media platforms, on social sharing sites like Reddit, on partisan chat boards or in closed social media groups (where a lot of partisan misinformation is shared as ideological “ammunition”).

Note: Russian government propaganda agents frequently “seeded” misinformation in such groups.

Once a piece of misinformation goes cross-platform, it becomes effectively impossible to track and correct. But flagging unlabeled satirical posts and other forms of misinformation as “fake” in replies and comments to those who (inadvertently or otherwise) share them can help reduce their spread and impact.

*An earlier version of this post said that Usher posted his tweet and the image of the fake Trump tweet “after the Dow Jones index dropped sharply in late March 2018.” Since Usher’s tweet was dated Feb. 5 and time travel has not yet been invented, that would have been impossible. We regret the error. (July 6, 2018)

Denzel Washington supports Trump (FALSE)

This meme — the notion that in 2016 actor Denzel Washington publicly supported then-presidential candidate Donald Trump — is an example of a false claim that has evolved over time as it has spread across social media and on a variety of unreliable websites. Other iterations of this rumor falsely quoted Washington as saying that when Hillary Clinton lost the 2016 presidential election, “we avoided a war with Russia, and we avoided the creation of an Orwellian police state.”

This rumor first went viral in the final weeks of the 2016 presidential campaign. It resurfaced in February 2018 when Washington was nominated for an Oscar — and appeared yet again in April 2018, after musician Kanye West tweeted a statement of support for Trump, reigniting longstanding discussions about the political loyalties of black American voters.

Insight: Viral rumors often recirculate when new contexts for them emerge.

What can we learn?

Let’s start with an instance of this viral meme that was included in a post on a highly suspect website — USALibertyPress.com — in February 2018:

Note: The link in the previous paragraph is to an archive of the “fake news” website. That avoids linking to the site itself and giving it additional web traffic and ad revenue.

The first step we should take is to do a little lateral reading* on the claim itself. So let’s do a quick search:

All the top results for this search are from fact-checking organizations, so we can safely assume that this is a questionable claim. A quick read of the fact checks confirms that the claims in both the headline and the meme on the Liberty Press website are false. PolitiFact traced the headline claim to a well-known “fake news” website, YourNewsWire.com, and Snopes shows that the quote attributed to Washington was actually said by Charles Evers, the brother of slain civil rights leader Medgar Evers, in an interview with the New York Post.

Note: Like pieces of quality journalism, good fact checks and debunking articles are transparent. They don’t ask you to trust them; they show you why you should trust them.

But this still leaves us with questions about the source of the information. The source we started with is USALibertyPress.com, so let’s begin there.

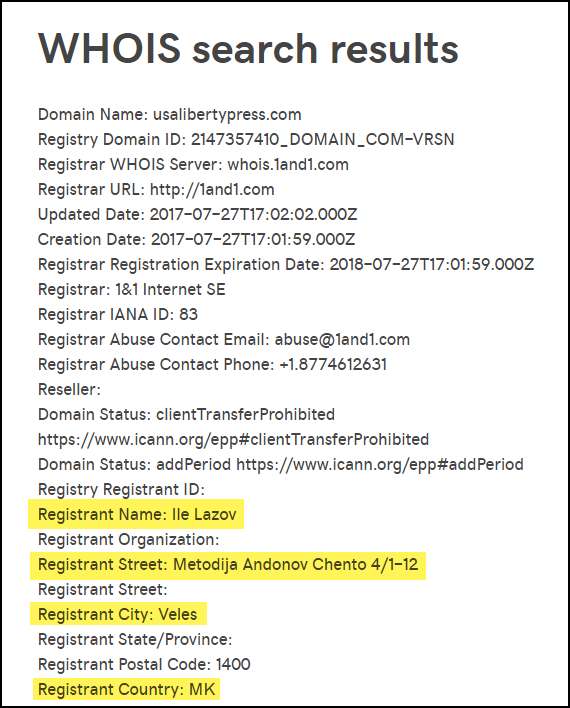

Since the site does not have an “About” section — a red flag in itself — we can try to see who might have established it by searching the WHOIS registry, a database of website registration information.

Tool: WHOIS is a database of website domain registration information that you can search using any number of free portals, such as whois.net and GoDaddy’s WHOIS search portal.
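For readers comfortable with a little code, a WHOIS lookup can also be sketched in Python. This is a rough illustration, not the method used for this lesson: the function names and the sample record are made up for the example, and the default server (whois.verisign-grs.com, the registry for .com domains) usually returns only a summary. Registrant details, when they are public at all, tend to live on the registrar’s own WHOIS server and are often redacted.

```python
import socket

# Invented sample record for illustration only; it is NOT the real
# registration data for any website mentioned in this lesson.
SAMPLE_RECORD = """\
Domain Name: example-news-site.com
Creation Date: 2017-01-15T00:00:00Z
Registrant City: Exampleville
Registrant Country: ZZ
"""


def parse_whois(text: str) -> dict[str, str]:
    """Collect 'Key: Value' lines from a WHOIS reply (first value for each key wins)."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        # Split on the first colon only, so dates and URLs stay intact.
        key, sep, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if sep and key and value and key not in fields:
            fields[key] = value
    return fields


def query_whois(domain: str, server: str = "whois.verisign-grs.com",
                timeout: float = 10.0) -> str:
    """Send a plain-text WHOIS query over TCP port 43 and return the raw reply."""
    with socket.create_connection((server, 43), timeout=timeout) as conn:
        conn.sendall(domain.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # the server closes the connection when it is done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


# Offline use: inspect the invented sample record.
record = parse_whois(SAMPLE_RECORD)
print(record.get("Registrant City"), record.get("Registrant Country"))

# Live use (needs network access): parse_whois(query_whois("example.com"))
```

Whatever tool you use, the habit is the same: look at who registered the site, where and when, and treat missing or hidden registration details as a reason to dig further rather than as proof of anything.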

A quick search for “USALibertyPress.com” on GoDaddy’s WHOIS search portal gave us this**:

As the highlighted areas show, this site was registered by someone at an address in Veles, Macedonia — a hub for “fake news” purveyors. A quick search for Veles confirms its infamy:

We now have all we need to confirm that not only is this a false claim, but at least one website pushing it is part of a network of “fake news” sites based in Macedonia.

Finally, you could use Google Street View to see the physical address in Veles that was used to register this site:

There is quite a bit more you could do with this example — see this tweet thread for more ideas — but these three steps (reading laterally for additional information, searching the WHOIS registry for a website’s origin and using Google Street View to check out the location) are important skills that will help you sort fact from fiction in the future.

*The lateral reading concept and the term itself developed from research conducted by the Stanford History Education Group (SHEG), led by Sam Wineburg, founder and executive director of SHEG.

**UPDATE (Aug. 28, 2018): The domain registration for www.usalibertypress.com was updated on July 28, 2018, and no longer includes information about the registrant in Macedonia. The site itself is now offline.