Track the trends: Election integrity targeted by online disinformation

The News Literacy Project is tracking presidential election misinformation trends online. As voting day nears, let’s examine some of them, so we can make sure to cast our ballots based on facts, not falsehoods.

A batch of mail-in ballots cast for former President Donald Trump was not destroyed in Bucks County, Pennsylvania; a vacant address in Erie, Pennsylvania is not being used to register illegal voters; and voting machines are not systematically flipping votes in Georgia. The final days of the 2024 presidential election are motivating a spike in falsehoods aimed at undermining confidence in the electoral process in the United States.

Noncitizens Voting

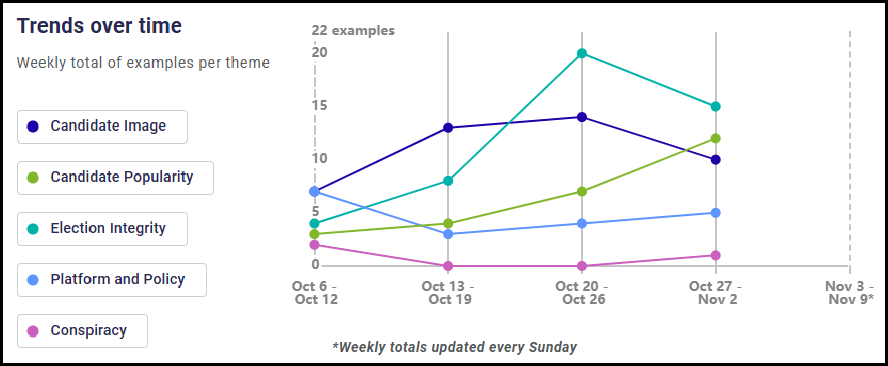

Falsehoods targeting the integrity of elections make up about 15% of all rumors in the News Literacy Project’s Misinformation Dashboard: Election 2024. These false claims have pushed narratives related to election interference, voter interference, and candidate eligibility, and the most common rumor is that noncitizens are illegally casting votes. Yet, as elections experts have repeatedly pointed out, noncitizens aren’t eligible to vote, and instances of noncitizens casting a ballot are extraordinarily rare.

Election Night

Another target of election misinformation is the vote counting process itself. While falsehoods misrepresenting how ballots are tallied are already frequent, this misinformation theme is likely to expand on election night as votes are counted. Here are a few disinformation trends to expect on Nov. 5:

- Surges in votes being misconstrued as fraud. Remember, counties around the country report their totals in batches, not by individual ballots, so it’s normal to see vote totals jump, especially as larger urban counties report their numbers.

- Premature declarations of victory. Some states have laws prohibiting the processing of mail-in or absentee ballots until Election Day, making it difficult to announce a winner on election night. Nonetheless, candidates might prematurely declare themselves the winner and call for vote counting to stop. Delays in reporting results are normal, not a sign of fraud, and it may take days for states to tally results.

- Individual voting glitches misrepresented as widespread fraud. With tens of thousands of polling places across the country, unexpected things can happen. A power outage, a broken machine or a burst water pipe are just a few examples. Expect any of these kinds of individual anomalies to be weaponized and spread as evidence of cheating or fraud.

NewsLit Tip: False claims often go viral when they evoke an immediate emotional reaction, especially during a high-profile event like Election Day. Remember to slow down, stay skeptical, and check viral claims against credible sources before sharing sensational content online.

For even more disinformation trends, check out our new prebunking guide, a collaboration between the News Literacy Project and the Mental Immunity Project.

Interested in learning more about the methodology we use to collect election-related falsehoods? Visit the Misinformation Dashboard and click on “Where we get our data.” You can also contribute: Use this form to submit a false claim that is not yet in the dashboard.

Track the trends: Candidate fitness and election integrity rank high in rumors

The News Literacy Project is tracking presidential election misinformation trends online. As voting day nears, let’s examine some of them, so we can make sure to cast our ballots based on facts, not falsehoods.

Fitness for the Job

The most common election misinformation narrative is that a candidate is unfit for the job.

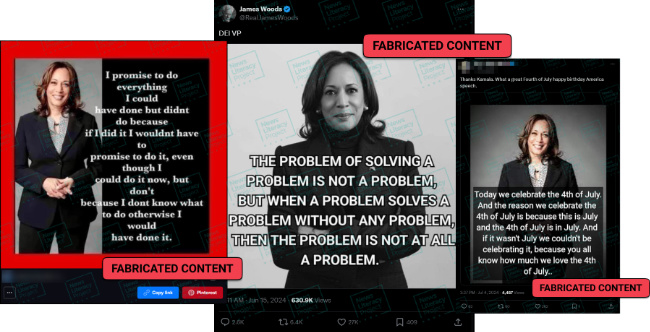

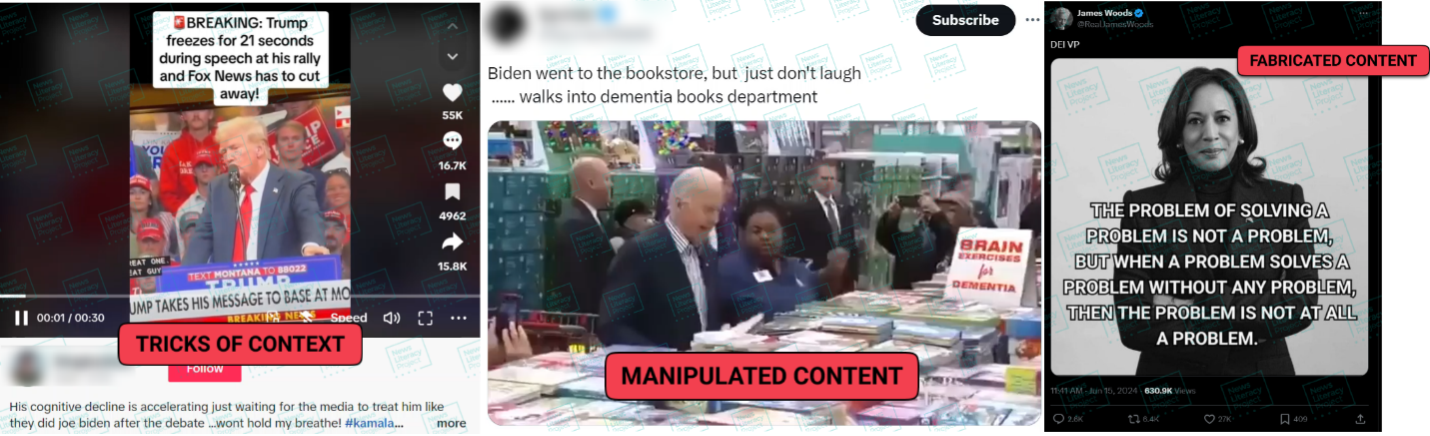

False claims targeting Vice President Kamala Harris paint her as unintelligent or intoxicated. These falsehoods spread through a variety of tactics: short segments of her speeches presented out of context; video of her speaking slowed down to make her words sound slurred; voice actors used to create realistic parodies; and quotes fabricated out of thin air. All were presented online as genuine. Be on the lookout for similar claims being pushed with these tactics.

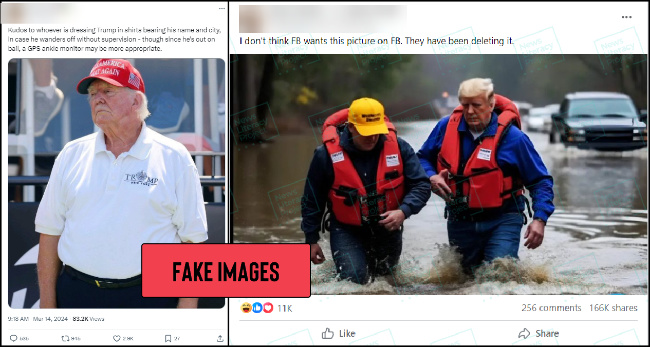

Former President Donald Trump’s mental acuity also is a focus of false attacks, and his physical appearance is frequently distorted on social media.

The image on the left circulated widely among Trump’s critics and the one on the right among supporters, but neither accurately depicts Trump. On social media, people can easily get siloed into feeds that provide a politically slanted view — one that feels “right” for the way it resonates with existing personal biases.

NewsLit Tip: NLP’s Misinformation Dashboard: Election 2024 contains numerous misinformation examples that involve distortions related to a candidate’s physical and mental fitness. These falsehoods tend to reinforce preconceived beliefs, so if you have ever thought to yourself, “It might as well be true” after encountering a provocative claim about a candidate, pause for a moment. Reassess your thinking to be sure your opinion of a post is based on accurate information and not your political leaning.

Casting doubt on casting votes

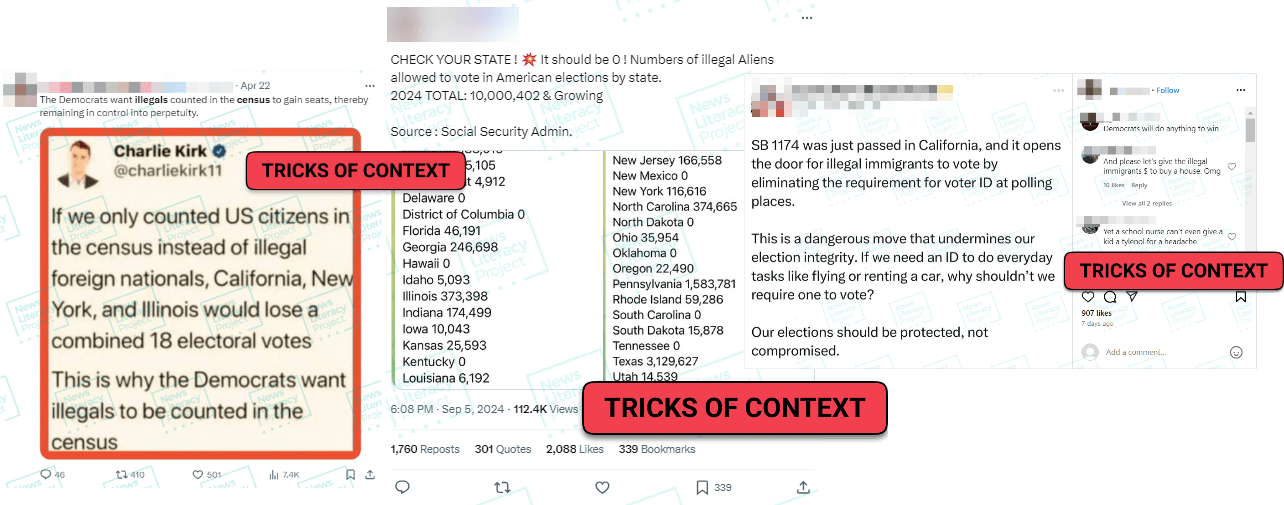

A large portion of the claims in the database target the election itself, with falsehoods attempting to cast doubt on the results. These falsehoods rehashed debunked rumors from 2020, presented standard election procedures out of context, and misconstrued voter registration data. But the most common false narrative in this category is that noncitizens are able to vote.

Noncitizens are not legally allowed to vote in the presidential election and there is no evidence to support these voter fraud claims.

False claims about election fraud are bound to spread in the days leading up to and following Election Day on Nov. 5, 2024. Be sure that you are getting your information from reputable, standards-based sources. And, if you see a shocking claim from an individual account, make sure to check it against standards-based news reporting.

Track the trends: Disinformation disguised as ‘breaking news’

Welcome back to our blog series focused on the Misinformation Dashboard: Election 2024, a tool for exploring trends and analysis related to falsehoods regarding the candidates and voting process.

Hours before the June presidential debate between former President Donald Trump and President Joe Biden, an X account posted a “breaking news” story claiming that CNN would implement a one- to two-minute live delay to edit footage before broadcasting it.

The claim was entirely made up, and the word “BREAKING” was used to pass it off as a credible news report. It worked. The post amassed more than 2 million views.

Standards-based news outlets use terms like “breaking news” to signal important developments in an ongoing story. But these alerts have been co-opted by purveyors of disinformation to create fake urgency and spread “breaking” news stories that are neither breaking nor news.

The Sheer Volume of Sheer Assertions

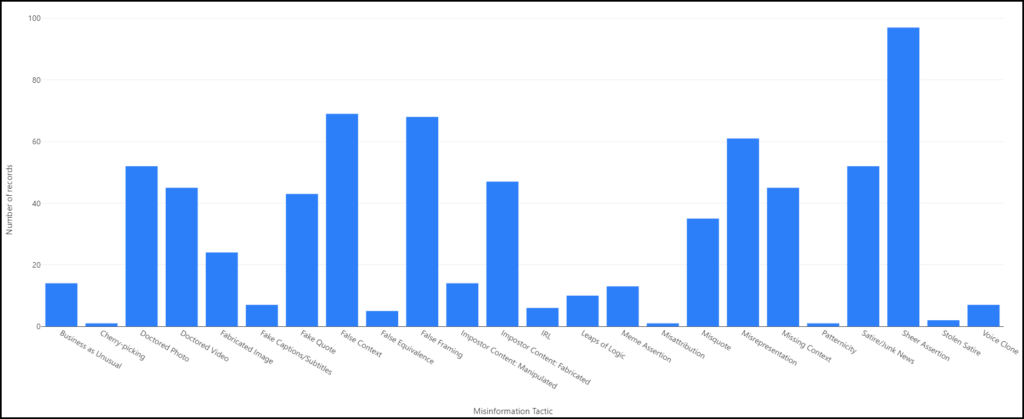

NLP’s Misinformation Dashboard: Election 2024 catalogs examples of election misinformation — tracking trends, types and themes. While propagandists have ample tools to create false and misleading content, the most widely used tactic is simply to conjure false claims out of thin air. These “sheer assertions,” as they’re known, make up about 15% of all claims in NLP’s database.

Data from NLP’s misinformation dashboard shows that “sheer assertion” is the leading tactic used to spread misinformation.

On social media, sheer assertion claims about breaking news events are among the fastest to surface and are published recklessly, before all the facts emerge. Journalists report breaking news by interviewing sources, checking data, verifying facts and updating coverage as needed. On the other hand, charlatans — many of whom claim to be doing “citizen journalism” — co-opt journalism lingo to push out baseless speculation or fabrications in mere seconds.

NewsLit Tip

Social media posts with breaking news language — including “breaking,” “developing,” and “exclusive” — warrant careful examination. Investigate an account’s profile to see if it is connected to a credible news outlet or has a history of publishing accurate information.

Don’t be misled by use of journalism terminology to make these alerts seem authentic. They miss two important aspects of credible reporting: multiple sources and evidence.

As Mark Twain once never said: “A lie can travel halfway around the world while the truth is still putting on its shoes.” Reliable information develops slowly during a breaking news event. Remember that bad actors attempt to fill that void with false claims.

A claim is not evidence

Along with sheer assertions, there are other rumor tactics tied to breaking news events:

- Sensational claims attributed to a single, unnamed “source.”

- Generic photographs that don’t support the claim or add to the supposed story.

- Nonsensical accusations that the media is “ignoring” a story or that a “blackout” has been issued to prevent coverage.

- False attributions to credible news outlets.

Doing real journalism is difficult and takes time, while producing falsehoods of almost any sort is comparatively easy and quick. Of all the tactics people use to spread misinformation, pushing out evidence-free assertions might require the least amount of effort. While this tactic is popular, it is also easy to spot and debunk. Just remember to check your sources, look for evidence and slow your scroll on social media to allow time for credible information to emerge.

This is part of a limited email series that analyzes trends based on the News Literacy Project’s Misinformation Dashboard: Election 2024. Subscribe to the series here.

Track the trends: Is it AI or is it authentic?

Welcome back to our blog series focused on the Misinformation Dashboard: Election 2024, a tool for exploring trends and analysis related to falsehoods regarding the candidates and voting process.

The prevalence of content generated by artificial intelligence has made it more difficult to differentiate between fact and fiction. Widely available AI tools allow people to create photo-realistic but entirely fabricated images. But how often are these fakes being passed off as genuine and used to spread misinformation, and what can we do to identify and debunk them?

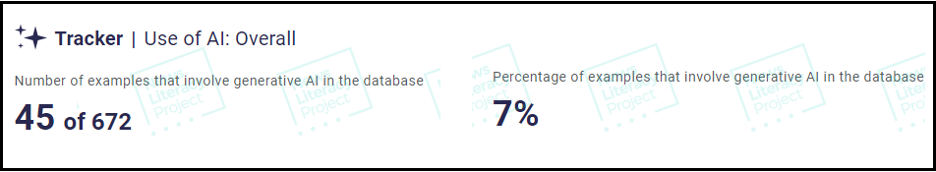

One of the goals of the News Literacy Project’s Misinformation Dashboard: Election 2024 is to monitor the use of AI-generated content to spread falsehoods about the presidential race. Now, with about 700 examples cataloged, let’s look at the impact.

Not the main source of misinformation

Researchers have long warned that AI-generated content could release a flood of falsehoods. And while there has certainly been an uptick in these fabrications, their use in election-related rumors has not been as widespread as predicted. NLP has found that AI-generated content has been used in only about 7% of the examples we’ve documented.

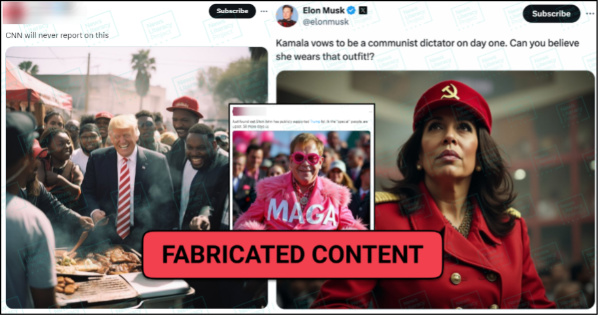

Completely fabricated images of former President Donald Trump, President Joe Biden or Vice President Kamala Harris make up most of the AI-generated content in NLP’s database. These digital creations can be used to bolster a candidate’s reputation — such as fabricated images showing Biden in military fatigues or Trump praying in a church — or to denigrate their character — like fabricated images of Harris in communist garb or Trump being arrested.

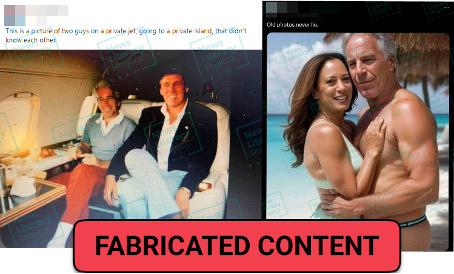

AI can be used to make “photos” that distort a candidate’s relationship with certain groups or people. NLP’s database includes several AI-generated images depicting Trump with Black voters, as well as a number of images that falsely depict candidates posing with criminals or dictators, such as the late convicted sex offender Jeffrey Epstein.

The impact of AI: Question everything

Generative AI technology is behind a concerning trend in how people approach online content: growing cynicism and “deep doubt.” It has led to a prevailing belief that it is impossible to determine if anything is real. This attitude is now being exploited by accounts falsely claiming that genuine photographs are AI creations.

When enthusiastic crowds turned out at rallies for Harris in August, falsehoods circulated online attempting to downplay the support by claiming that the visuals were AI-generated. This same tactic was used after a photograph emerged that showed a group of extended family members of vice-presidential nominee Tim Walz offering their support to Trump. The photo of Walz’s second cousins — not his immediate family — was authentic, although efforts were made to dismiss it as AI-generated.

Low-tech solutions to high-tech problems

While the existence of AI fakes may make it feel like it is impossible to tell what is real, there is some good news. Whether a photograph is manipulated with computer software, an image is fabricated with an AI-image generator or a piece of media is shared out of context, the methods to identify, address and debunk these fakes remain the same.

- Consider the source. Who is sharing the content, and do they have a track record of posting accurate information in good faith?

- What is the original source of a photo? The lack of a credit is a red flag.

- Look for supporting evidence. Have any standards-based news outlets also shared the image?

When it comes to AI images, you may not be able to trust your own eyes. Slowing down to consider these key pieces of context is the best way to approach any content online — whether it’s real or created by AI.

Visit the Misinformation Dashboard: Election 2024

You can find additional resources to help you identify falsehoods and recognize credible election information on our resource page, Election 2024: Be informed, not misled.

Track the trends: Stay ahead of these election falsehoods

Welcome back to our blog series focused on the Misinformation Dashboard: Election 2024, a tool for exploring trends and analysis related to falsehoods regarding the candidates and voting process.

As of today, NLP’s Misinformation Dashboard: Election 2024 contains more than 600 examples of online falsehoods. By categorizing them by themes and narratives, we provide important insights about trends and patterns in the misinformation spreading about this year’s presidential race.

The role of repetition

Ever heard the phrase, if you “repeat a lie often enough … it becomes the truth”? It’s often credited to Nazi Joseph Goebbels, one of the most notorious and malevolent propagandists in history. This law of propaganda drives much of the misinformation we find online. Our vulnerability to oft-repeated falsehoods and the “illusion of truth” effect makes it crucial to understand the common themes and narratives of viral misinformation.

Candidate’s cognition a common theme

Claims that exaggerate and distort candidates’ cognitive abilities and intellect are the most frequent in our collection. This was true when President Joe Biden was the presumptive Democratic nominee running against former President Donald Trump, with many false claims attacking the candidate’s age. And the trend has continued with Vice President Kamala Harris. It’s important to note that these overall narratives are not entirely fabricated (all three politicians have had their share of public gaffes), but they appeal to our natural desire to confirm our biases, providing an exaggerated and distorted glimpse of reality in which the candidates are reduced to caricatures of themselves. Are Trump and Harris both mentally fit to be president? Our dashboard isn’t designed to answer that. But it can help to make you aware of the spate of misinformation that intends to skew your viewpoint on this question.

You can’t see the forest for the trees

Debunking individual rumors (for example, proving that Trump did not “freeze” during a campaign speech or that Harris did not say that “the problem of solving a problem is not a problem”) only partly succeeds in combating misinformation. Those who create and share misinformation are doing more than just pushing an individual falsehood. They are making a concerted and sustained effort to manipulate our political views by repeating these claims to distort consensus reality, or our shared understanding of the world around us.

We need to prepare ourselves for the inevitable false claims that will fill our news feeds in the lead-up to the 2024 election. The best way to do that is to shift our attention from individual posts of questionable content and focus more on broader trends. By learning to identify the false narratives that bad actors attempt to establish about candidates and the election process, we can spot them before they draw us in.

We will continue to add every election-related viral rumor we can find to our collection through Inauguration Day on Jan. 20, 2025. So, you might want to check the dashboard’s running tally of false claims as part of your daily news routine. We also will publish analyses of political misinformation here in the weeks to come.

Track the trends: Get to know the election dashboard

Welcome to our blog series focused on the Misinformation Dashboard: Election 2024, a tool for exploring trends and analysis related to falsehoods regarding the candidates and voting process.

Every day, people are bombarded with information on social media, and in this rush of content, false claims can slip by undetected. Developing the skills to identify and address falsehoods is important for every online user, especially in the days leading up to a historic presidential election, when the volume and variety of falsehoods ratchet up, along with the stakes.

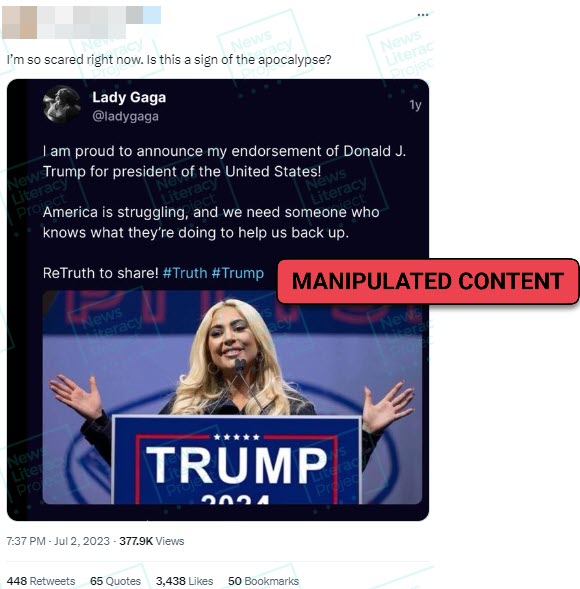

That’s why NLP has launched its Misinformation Dashboard: Election 2024 – an interactive collection of viral election-related falsehoods that we began compiling in July 2023, with a post from an ordinary Instagram account. The shocking image: a screenshot of what appeared to be an endorsement of Donald Trump for president from superstar Lady Gaga.

But it was not genuine. The image was created by doctoring a photograph of Gaga as she addressed a crowd in Pennsylvania during President Joe Biden’s campaign in November 2020. And the alleged quote, well, that was a complete fabrication. Since then, we’ve cataloged more than 500 other false claims aimed at influencing the electorate.

But the dashboard goes well beyond simply compiling examples of false, manipulated and AI-generated content. It identifies common tactics used to create these falsehoods, along with the recurring themes around which they cluster – an approach that we hope helps people better recognize and reject these pieces of misinformation in their feeds.

The Types and Tactics of Misinformation

NLP’s Misinformation Dashboard: Election 2024 is divided into two broad sections: themes and types of misinformation.

Tricks of context have proven to be the most common form of election misinformation online so far, and the most common of these is the use of “false context,” or presenting an image or video along with text that inaccurately describes it. This tactic is likely popular because it is both effective and easy. False context claims often follow major breaking news events when people are following trending hashtags to get the most recent information. During a natural disaster, it is practically guaranteed that engagement seekers will post years-old photographs or videos and falsely claim that they relate to current events. The same dynamic plays out in political posts. After a campaign rally, for example, it’s not uncommon for images of large, enthusiastic crowds – such as photos of concerts – to go viral as disingenuous depictions of crowd size.

Fabricated content and manipulated content are the two other misinformation types found in the dashboard. While there have been a fair number of fabricated images created with generative AI software, these technologically advanced falsehoods are still outpaced by less sophisticated counterparts. It is far more common to encounter a sheer assertion – an evidence-free claim that is fabricated out of thin air – or a fictional quote, than an AI-generated image. Manipulating content is another common approach to creating misinformation, with doctored images of T-shirts, signs, and news chyrons among the most common items used in these digital alterations.
